link

- A Pilot Study using Covert Visuospatial Attention as an EEG-based Brain Computer Interface to Enhance AR Interaction, ISWC 21 [[3460421.3480420.pdf]]

- Attention-driven Interaction Systems for Augmented Reality, ICMI 19 [[vortmann2019DC_ICMI.pdf]]

- Telepathic Play: Towards Playful Experiences Based on Brain-to-brain Interfacing, CHI PLAY 21 [[3450337.3483468.pdf]]

- The Future of Emotion in Human-Computer Interaction, CHI EA 22 [[3491101.3503729.pdf]]

- Introduction to real time user interaction in virtual reality powered by brain computer interface technology, SIGGRAPH 20 [[jo2020.pdf]]

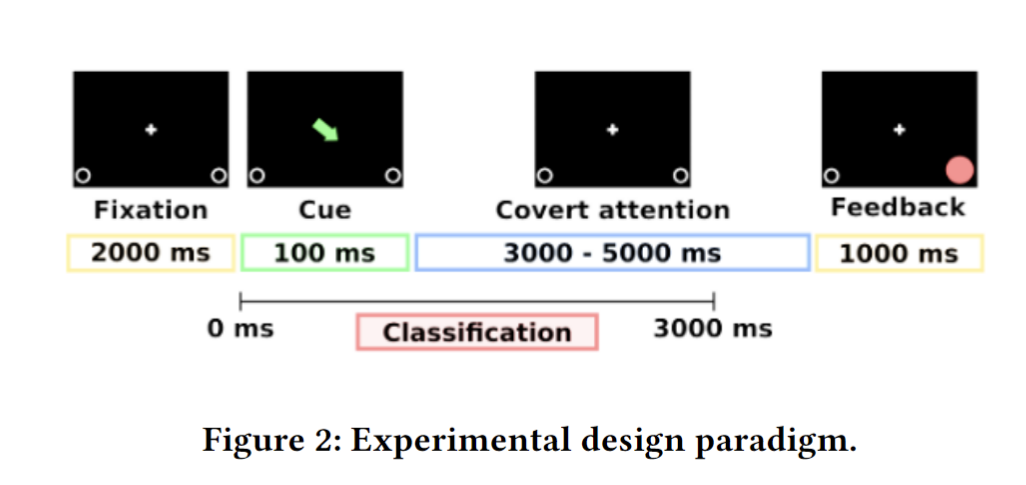

[ISWC'21] A Pilot Study using Covert Visuospatial Attention as an EEG-based Brain Computer Interface to Enhance AR Interaction

- covert visuospatial attention ~ EEG-based BCI => AR interaction

Threefold connection between BCI and AR HMD:

- both are worn on the head

- HMDs require hands-free interaction, which BCIs can provide

- AR HMDs have voice and/or eye gaze modalities for interaction, which can compensate for classification errors that occur during BCI use.

classification errors

Classification errors are instances where a machine learning model assigns a data point to the wrong class or category. They can be false positives (assigning something to a class it does not belong to) or false negatives (failing to assign something to the class it does belong to).
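To make the two error types concrete, here is a minimal sketch that counts them for a two-class problem. The left/right labels are made up for illustration (they are not data from the paper), and `confusion_counts` and `accuracy` are hypothetical helper names.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive):
    """Count TP, FP, FN and TN, treating one class as 'positive'."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            counts["tp"] += 1  # correctly assigned to the class
        elif pred == positive:
            counts["fp"] += 1  # false positive: assigned, but does not belong
        elif truth == positive:
            counts["fn"] += 1  # false negative: belongs, but was not assigned
        else:
            counts["tn"] += 1  # correctly left out of the class
    return counts


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Made-up trials: which side the user attended to vs. what was decoded.
y_true = ["left", "left", "right", "right", "left", "right", "left", "right"]
y_pred = ["left", "right", "right", "right", "left", "left", "left", "right"]

print(dict(confusion_counts(y_true, y_pred, positive="left")))
print(accuracy(y_true, y_pred))
```

With only two classes, a false negative for "left" is at the same time a false positive for "right", so the split depends on which class is treated as positive.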

problem with past work: BCI and AR HMD were used separately => here they can work simultaneously

Summary

Work:

- ~70% classification accuracy

- gaze shift: little impact & low correlation (independent BCI)

Highlights:

- pilot model of AR EEG-BCIs based on CVSA (covert visuospatial attention)

- covert: no overt eye movements are needed => usable by CLIS (complete locked-in syndrome) patients

- no stimuli => more suitable for long-term use

[ICMI'19] Attention-driven Interaction Systems for Augmented Reality

Key words: attention, AR, BCI, eye tracking

Attention: obtain a reliable estimate of the user's attentional state

- eye tracking: captures spatial information about visual attention

- electroencephalography (EEG): captures internal processes and context switches

- context information: yields important a priori information about likely targets of attention

a priori

state of attention

- internal

- virtual-external

- real-external

work: models based on multimodal biosignal data that classify the available information to estimate a user's attentional state

highlights of the work: (real-time adaptive system for AR)

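In plain terms: use some means to work out which information the user's attention is currently focused on, then adapt the AR UI accordingly to: 1. reduce the display of excess information 2. emphasize key information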

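Example scenario: a user wearing an AR headset in class gets distracted; the system can then: 1. block irrelevant information sources 2. highlight the content on the blackboard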
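The adaptation idea above can be sketched as a small rule table that maps an estimated attentional state to a display decision per UI element. Everything here is an assumption made for illustration: the `AttentionState` enum, the `adapt_ui` function, the element names and the rules themselves are not taken from the paper, which only describes the goal of a real-time adaptive system.

```python
from enum import Enum


class AttentionState(Enum):
    INTERNAL = "internal"                  # e.g. mind wandering
    VIRTUAL_EXTERNAL = "virtual-external"  # attending to virtual content
    REAL_EXTERNAL = "real-external"        # attending to the real world


def adapt_ui(state, elements):
    """Map each UI element to 'show', 'hide' or 'highlight'.

    elements: dict of element name -> True if it carries key information.
    The rules below are illustrative assumptions, not the paper's method.
    """
    decisions = {}
    for name, is_key in elements.items():
        if state is AttentionState.INTERNAL:
            # User is distracted: suppress clutter, emphasize key content.
            decisions[name] = "highlight" if is_key else "hide"
        elif state is AttentionState.REAL_EXTERNAL:
            # User attends to the real world: keep only key overlays.
            decisions[name] = "show" if is_key else "hide"
        else:
            # User attends to virtual content: leave the UI unchanged.
            decisions[name] = "show"
    return decisions


# Hypothetical classroom overlay with one key and one non-key element.
overlay = {"blackboard_outline": True, "chat_notifications": False}
print(adapt_ui(AttentionState.INTERNAL, overlay))
```

In a real system the state would come from a classifier over eye-tracking, EEG and context features; here it is simply passed in.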

Example application scenario:

assemble different pieces of smart-home furniture

- AR guidance about the process

- the user cannot keep up with the AR guidance: adjust salience and timing

- pause instructions when interrupted

- reminder when the user's mind wanders

blending of real and virtual objects => visual complexity =>

- if the AR interaction is well designed => attention allocation increases

- otherwise it decreases

Telepathic Play: Towards Playful Experiences Based on Brain-to-brain Interfacing, CHI PLAY 21

"Minds in sync": EEG input from A ---> effect on B via tES stimulation, so that B "feels what A feels"

highlights of the work: direct BBI systems in the field of interactive art

BBI: Brain-to-Brain interface

Before:

- BCIs could only read brain activity, not directly affect other people

- indirect interaction: presenter → environment → viewer

Now:

- direct interaction: presenter → viewer

- BCI as input (brainwaves) & output (tES: transcranial electrical stimulation)

bold: little scientific progress, but a tremendous impact on the effectiveness of interpersonal expression

kind of romantic

This paper focuses more on the experience of interfacing. It is more about people than about scientific theories & processes

- closer connectedness

- ambiguous information transmission: mind guess experience

- sense of control over others' minds

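*Point 2 actually takes a positive view of a limitation of this mode: although the complexity of the exchanged information has increased (not just 0/1; several kinds of stimulation can be delivered), it is still not enough to really "understand another person", so it works like a draw-and-guess game. The paper, however, considers the "guessing others' minds" that results from this limitation to be fun.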

- ethical problems

The Future of Emotion in Human-Computer Interaction, CHI EA 22

workshop?

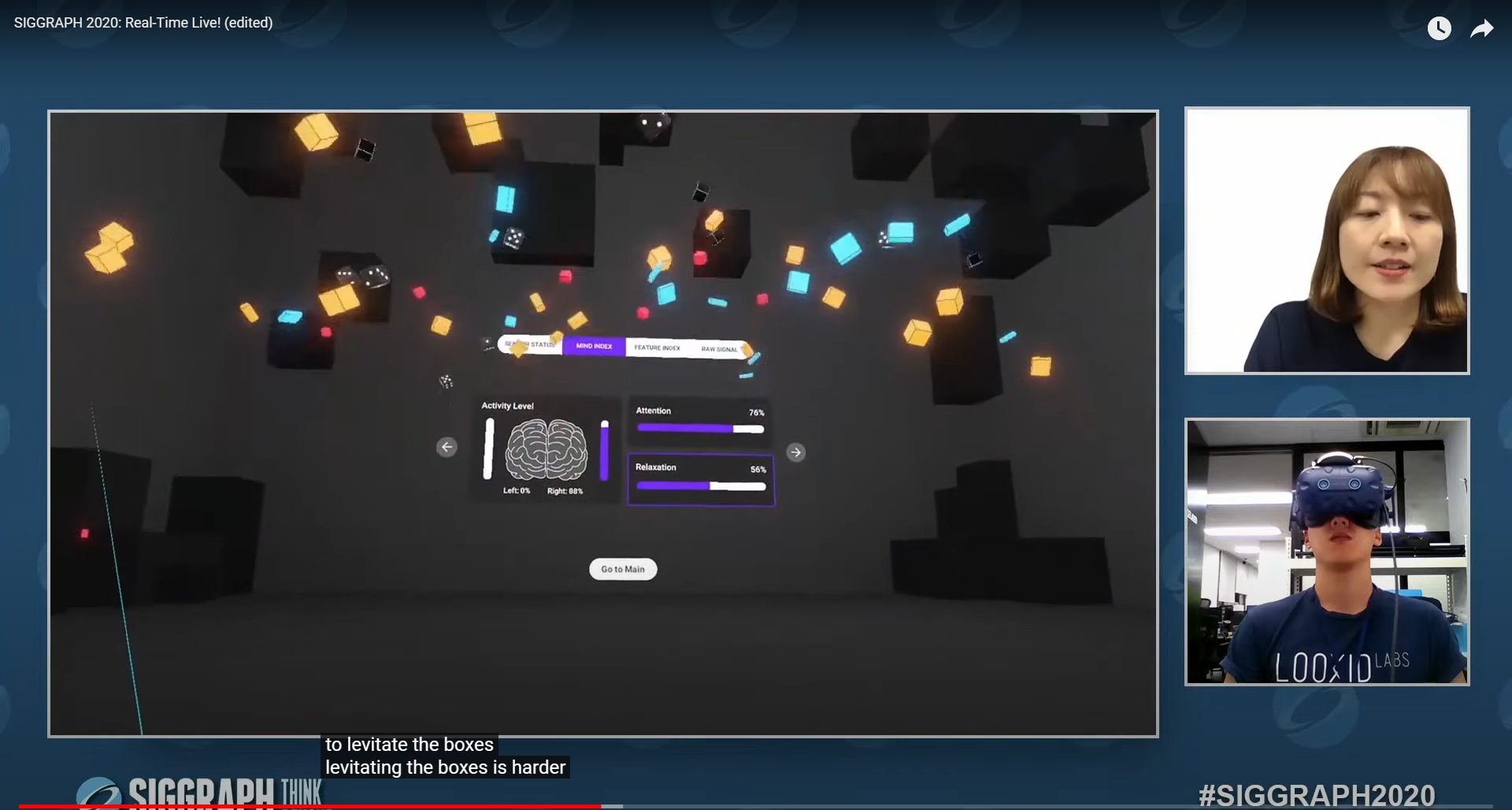

Introduction to real time user interaction in virtual reality powered by brain computer interface technology, SIGGRAPH 20

SIGGRAPH 2020: Real-Time Live! (edited) - YouTube, 25:07 - 40:18

- Looxid Link: brainwave module

- attention & relaxation

- app1: mind care (meditation session that helps the user relax)

The color of the ring in the image changes with the user's mood

- app2: training room (attention)

- the user generates VR objects (cubes) by imagining them

- when the user focuses on the cubes, they rise; otherwise, they fall

- app3: mind master
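The training-room behavior (app2: cubes rise while the user is focused and fall otherwise) can be sketched as a per-frame update rule. This is only an illustration under assumptions: the attention score in [0, 1], the threshold, the speeds and the function name `update_cube_height` are hypothetical and do not come from the demo or from any real device API.

```python
def update_cube_height(height, attention, dt,
                       threshold=0.5, rise_speed=1.0,
                       fall_speed=1.5, max_height=3.0):
    """Return the cube's new height after one time step of dt seconds.

    attention: assumed normalized score in [0, 1] from the brainwave module.
    """
    if attention >= threshold:
        height += rise_speed * dt   # focused: the cube rises
    else:
        height -= fall_speed * dt   # not focused: the cube falls
    # Keep the cube between the floor and the ceiling.
    return min(max(height, 0.0), max_height)


# Made-up attention samples, one per 0.5 s frame.
height = 0.0
for attention in [0.8, 0.9, 0.7, 0.2, 0.1]:
    height = update_cube_height(height, attention, dt=0.5)
    print(round(height, 2))
```

Using a faster fall than rise is just one possible design choice; it makes a lapse in focus visible quickly.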

work: applications that connect mind with VR (attention)